A Complete Guide to Online Poker Gambling: What Every Poker Player Needs to Know

Poker is one of the most popular card games in the world, and the arrival of online platforms has made it even more …

From Bluffing to Profit: The Art of Deception in Online Poker

In online poker, anyone can be dealt good or bad cards. But not everyone …

Online Poker: Winning Strategies So You're Not Just Making Up the Numbers at the Table

Online poker is not just an ordinary card game. Behind a screen that looks simple lie intrigue, psychology, …

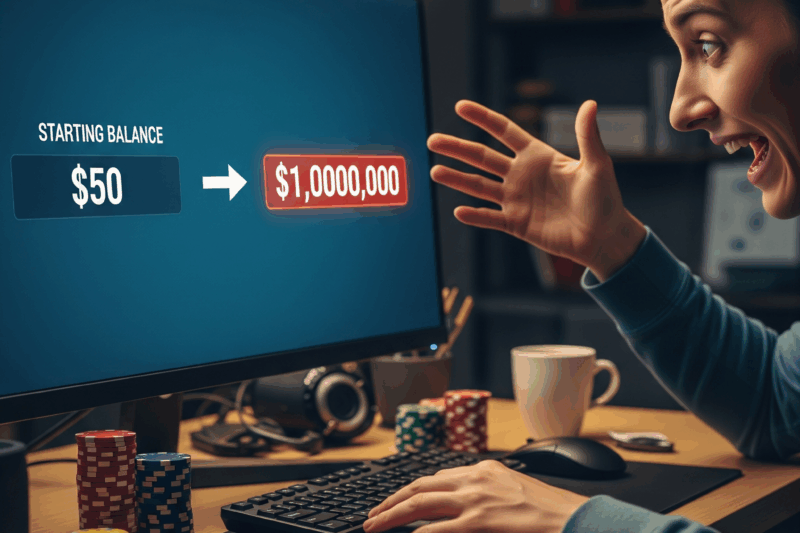

Pocket-Change Stakes, Big Winnings! Online Poker Can Make You Rich Overnight!

1. Introduction: From Pocket Change to a Quick Windfall. Online poker used to be seen as just another card game. However, …

A Little Bluff, and the Money Comes In! Online Poker Is No Joke!

Online poker is more than just a card game. Behind its digital screen lie complex dynamics, …

Pro Secrets: How to Read Your Opponents in Online Poker

Introduction: Poker is not just a card game; it is also a game of psychology, strategy, and analyzing your opponents' behavior. …

5 Fatal Mistakes to Avoid When Playing Online Poker

Introduction: Online poker is one of the most popular card games in the digital world. Not only …

Winning Online Poker Strategies: From Bluffing to Going All-In

Introduction: Online poker has become one of the most popular card games in the world. With advances in technology, …

A Complete Guide to Playing Online Poker for Beginners

Introduction: Poker is one of the most popular card games in the world. The game combines strategy, luck, …